Amortized supersampling

ACM Trans. Graphics (SIGGRAPH Asia), 28(5), 2009.

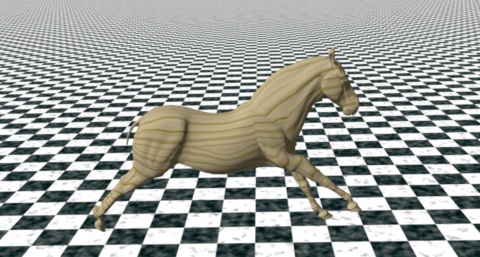

Adaptive reuse of pixels from previous frames for high-quality antialiasing.

Abstract:

We present a real-time rendering scheme that reuses shading samples from earlier time frames to achieve practical antialiasing of procedural shaders. Using a reprojection strategy, we maintain several sets of shading estimates at subpixel precision, and incrementally update these such that for most pixels only one new shaded sample is evaluated per frame. The key difficulty is to prevent accumulated blurring during successive reprojections. We present a theoretical analysis of the blur introduced by reprojection methods. Based on this analysis, we introduce a nonuniform spatial filter, an adaptive recursive temporal filter, and a principled scheme for locally estimating the spatial blur. Our scheme is appropriate for antialiasing shading attributes that vary slowly over time. It works in a single rendering pass on commodity graphics hardware, and offers results that surpass 4×4 stratified supersampling in quality, at a fraction of the cost.
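To give a rough feel for the recursive temporal filter mentioned in the abstract, here is a minimal sketch of exponential smoothing for a single static pixel, where each frame contributes one new jittered shading sample. It is an illustration under simplifying assumptions, not the paper's implementation: reprojection, the nonuniform spatial filter, and the blur estimate are all left out, and the names `shade`, `amortized_estimate`, and `alpha_min` are hypothetical.

```python
import random


def shade(x):
    """Hypothetical procedural shader: a hard edge at x = 0.5 within the pixel."""
    return 1.0 if x < 0.5 else 0.0


def amortized_estimate(num_frames, alpha_min=0.0, seed=0):
    """Recursively blend one new shaded sample per frame into a running estimate.

    Assumption: the smoothing factor is alpha = max(1 / N, alpha_min), where N is
    the number of samples accumulated so far. With alpha_min = 0 this is a plain
    running mean; a larger alpha_min favors recent samples over history.
    """
    rng = random.Random(seed)
    estimate = 0.0
    count = 0
    for _ in range(num_frames):
        sample = shade(rng.random())  # the single new shaded sample this frame
        count += 1
        alpha = max(1.0 / count, alpha_min)
        estimate = alpha * sample + (1.0 - alpha) * estimate
    return estimate


if __name__ == "__main__":
    # The edge covers half the pixel, so the estimate should approach 0.5.
    print(amortized_estimate(1000))
```

Raising `alpha_min` makes the estimate respond faster to change at the price of more residual aliasing. In this sketch the factor is a fixed parameter, whereas the abstract describes a temporal filter that adapts.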

Hindsights:

Some related work is being pursued in the CryEngine 3 game platform, as described by Anton Kaplanyan in a SIGGRAPH 2010 course. It also seems related to the temporal reprojection in NVIDIA TXAA, although there are few details on its technology.
