Image-space bidirectional scene reprojection

ACM Trans. Graphics (SIGGRAPH Asia), 30(6), 2011.

Real-time temporal upsampling through image-based reprojection of adjacent frames.

Abstract:

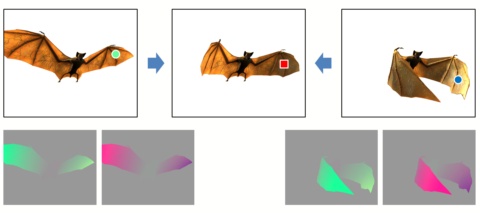

We introduce a method for increasing the framerate of real-time rendering applications. Whereas many existing temporal upsampling strategies only reuse information from previous frames, our bidirectional technique reconstructs intermediate frames from a pair of consecutive rendered frames. This significantly improves the accuracy and efficiency of data reuse since very few pixels are simultaneously occluded in both frames. We present two versions of this basic algorithm. The first is appropriate for fill-bound scenes as it limits the number of expensive shading calculations, but involves rasterization of scene geometry at each intermediate frame. The second version, our more significant contribution, reduces both shading and geometry computations by performing reprojection using only image-based buffers. It warps and combines the adjacent rendered frames using an efficient iterative search on their stored scene depth and flow. Bidirectional reprojection introduces a small amount of lag. We perform a user study to investigate this lag, and find that its effect is minor. We demonstrate substantial performance improvements (3-4X) for a variety of applications, including vertex-bound and fill-bound scenes, multi-pass effects, and motion blur.

Hindsights:

One nice contribution is the idea of using an iterative implicit solver to locate the source pixel given a velocity field. In the more recent project “Automating image morphing using structural similarity on a halfway domain”, we adapt this idea to evaluate an image morph directly in a pixel shader, without requiring any geometric tessellation, i.e., using only “gather” operations.
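The iterative implicit solve mentioned above can be illustrated with a small fixed-point iteration. This is only a sketch under stated assumptions, not the paper's shader code: it assumes the source pixel q satisfies q + alpha * v(q) = p, where p is the target pixel, v is the velocity (flow) field, and alpha is the fractional time of the intermediate frame. The names `find_source_pixel`, `flow`, `alpha`, and `iterations` are hypothetical. Each step only reads the flow at the current estimate, so it is a pure "gather" operation.

```python
from typing import Callable, Tuple

Vec2 = Tuple[float, float]


def find_source_pixel(
    target: Vec2,
    flow: Callable[[Vec2], Vec2],
    alpha: float = 0.5,
    iterations: int = 3,
) -> Vec2:
    """Approximately solve q + alpha * flow(q) = target for q.

    Assumed formulation (not taken from the paper): `flow(q)` returns the
    per-frame motion vector stored at position q, and `alpha` in [0, 1] is
    the time of the intermediate frame between the two rendered frames.
    """
    # Initial guess: the source pixel is the target pixel (no motion).
    qx, qy = target
    for _ in range(iterations):
        # Gather the velocity at the current estimate ...
        vx, vy = flow((qx, qy))
        # ... and step back from the target along that velocity.
        qx = target[0] - alpha * vx
        qy = target[1] - alpha * vy
    return qx, qy


if __name__ == "__main__":
    # Constant flow of (4, -2) pixels per frame; halfway frame (alpha = 0.5).
    print(find_source_pixel((10.0, 10.0), lambda q: (4.0, -2.0)))  # (8.0, 11.0)
```

With a constant flow the iteration is exact after one step. For a smoothly varying flow it converges when the flow changes slowly relative to pixel spacing (the update is then a contraction), which is why a small fixed iteration count can be enough in this kind of setting.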
