Appearance-space texture synthesis

ACM Trans. Graphics (SIGGRAPH), 25(3), 2006.

Improved synthesis quality and efficiency by pre-transforming the exemplar.

Abstract:

The traditional approach in texture synthesis is to compare color neighborhoods with those of an exemplar.

We show that quality is greatly improved if pointwise colors are replaced by appearance vectors that

incorporate nonlocal information such as feature and radiance-transfer data. We perform dimensionality

reduction on these vectors prior to synthesis, to create a new appearance-space exemplar. Unlike a texton

space, our appearance space is low-dimensional and Euclidean. Synthesis in this information-rich space

lets us reduce runtime neighborhood vectors from 5x5 grids to just 4 locations. Building on this unifying

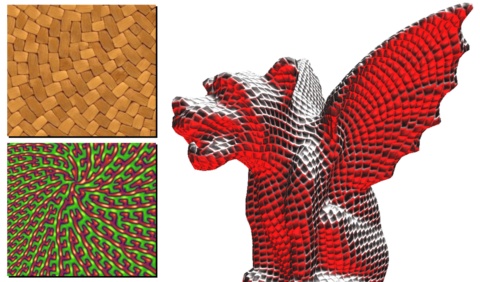

framework, we introduce novel techniques for coherent anisometric synthesis, surface texture synthesis

directly in an ordinary atlas, and texture advection. Remarkably, we achieve all these functionalities in

real-time, or 3 to 4 orders of magnitude faster than prior work.

Hindsights:

The main contribution of this paper is sometimes misinterpreted: it is not the use of PCA projection to accelerate texture neighborhood comparisons (which is already done in [Hertzmann et al 2001; Liang et al 2001; Lefebvre and Hoppe 2005]); rather it is the transformation of the exemplar texture itself (into a new appearance space) to let each transformed pixel encode nonlocal information.
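The exemplar transformation described above can be illustrated with a minimal sketch. It is not the paper's implementation: it builds appearance vectors from color neighborhoods only (no feature or radiance-transfer channels), and the function name `appearance_space_exemplar`, the 5x5 window, the 8 output dimensions, and the toroidal wrap at the borders are all assumptions chosen for illustration.

```python
import numpy as np

def appearance_space_exemplar(exemplar, window=5, dims=8):
    """Replace each exemplar pixel by a PCA-reduced vector of its neighborhood.

    exemplar: float array of shape (H, W, C).
    Returns an array of shape (H, W, d) with d = min(dims, available components).
    Hypothetical helper; window size, dims, and wraparound are assumptions.
    """
    h, w, c = exemplar.shape
    r = window // 2
    # Stack the window x window neighborhood of every pixel along the
    # channel axis, wrapping around the image borders (toroidal exemplar).
    shifted = [np.roll(exemplar, shift=(-dy, -dx), axis=(0, 1))
               for dy in range(-r, r + 1)
               for dx in range(-r, r + 1)]
    vectors = np.concatenate(shifted, axis=2).reshape(h * w, -1)
    # PCA through an SVD of the mean-centered appearance vectors.
    centered = vectors - vectors.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    d = min(dims, vt.shape[0])
    # Project onto the leading principal directions; each output pixel
    # now summarizes its whole neighborhood in a low-dimensional vector.
    return (centered @ vt[:d].T).reshape(h, w, d)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    exemplar = rng.random((32, 32, 3))
    transformed = appearance_space_exemplar(exemplar)
    print(transformed.shape)
```

The point of the sketch is the ordering of operations: the projection is applied once to the exemplar itself, before synthesis, so that later neighborhood comparisons operate on pixels that already carry nonlocal information, with plain Euclidean distance between the reduced vectors.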

See Design of tangent vector fields for interactive painting of oriented texture over a surface.

Additional results:

- Closeups of figures from the paper

- Comparison of results with [Lefebvre and Hoppe 2005]

- Results of surface texture synthesis

- Results of anisometric texture synthesis: set 1, set 2

- Results of texture synthesis advection: A, B, C, D

- Example of advecting synthesized texture on the horse mesh
